Empower your team with Safe, Smart AI Adoption.

Discover Shadow AI, enforce security guardrails, and govern AI adoption.

Enterprise AI governance from one platform.


See which AI tools your team is using, and how

Secure your data with policy-driven guardrails

Engage your team with just-in-time AI training

Why choose Aona for Enterprise AI Adoption?

AI is rapidly integrating into workplaces, but many teams lack the necessary support and oversight. Aona provides the tools to ensure your team adopts AI effectively and responsibly.

Many generative AI adopters use unapproved tools at work.

Salesforce, AI Study 2024

Many employees have entered non-public company information into AI tools.

Cisco 2024, Data Privacy Benchmark Study

Many professionals feel unprepared for AI, which hinders productivity.

Economic Times, 2025

VIEW

Unified AI Usage Analytics

See all AI tool usage across your enterprise, from Microsoft Copilot to ChatGPT, including Shadow AI activity.

Identify which teams are innovating, find where silos exist, and uncover hidden adoption patterns.

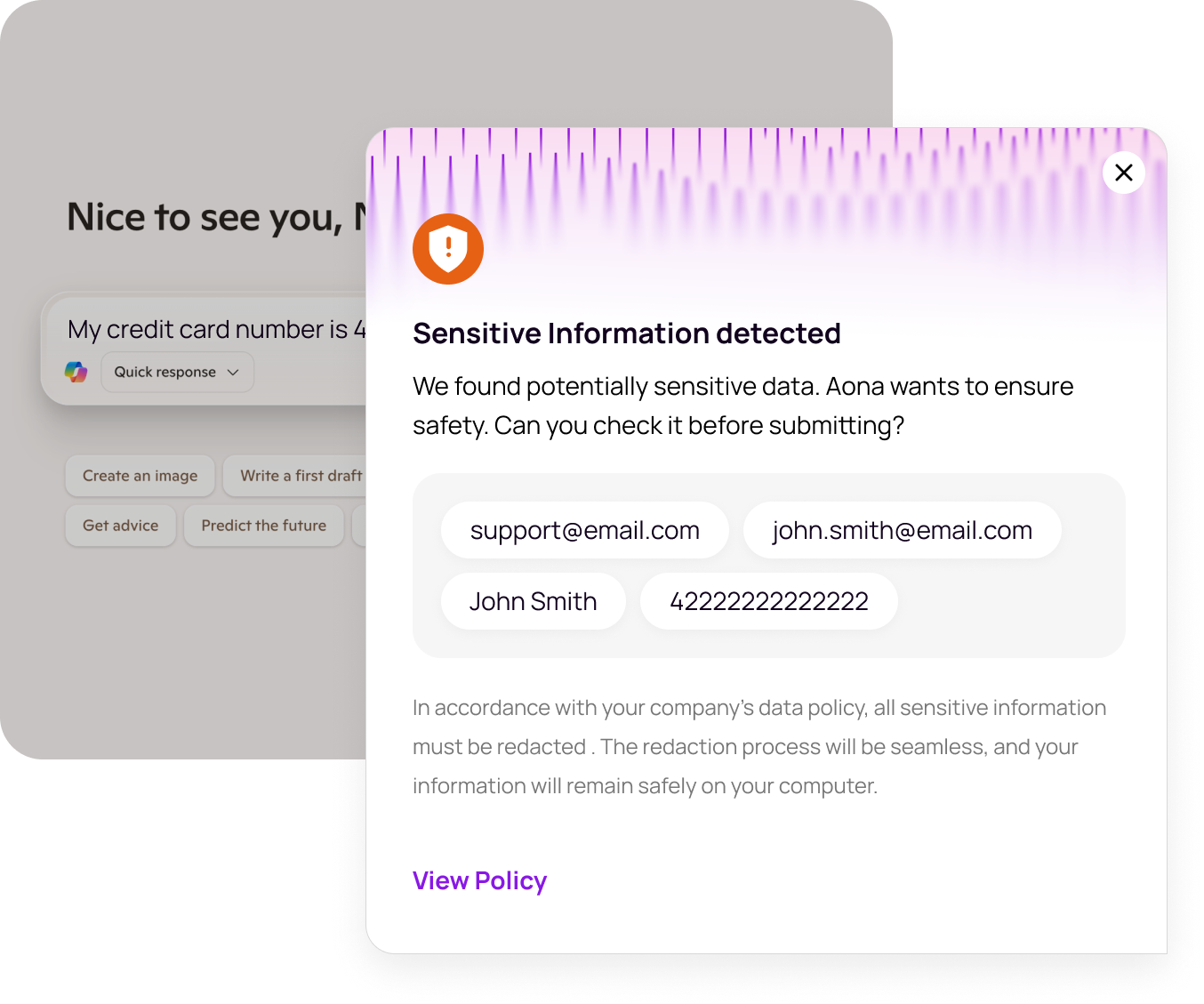

SECURE

AI Safety Guardrails

Ensure AI safety and compliance with automatic data redaction and policy enforcement. Our guardrails block sensitive information (PII, IP, confidential data) from being sent to external AI tools and guide users toward secure practices.

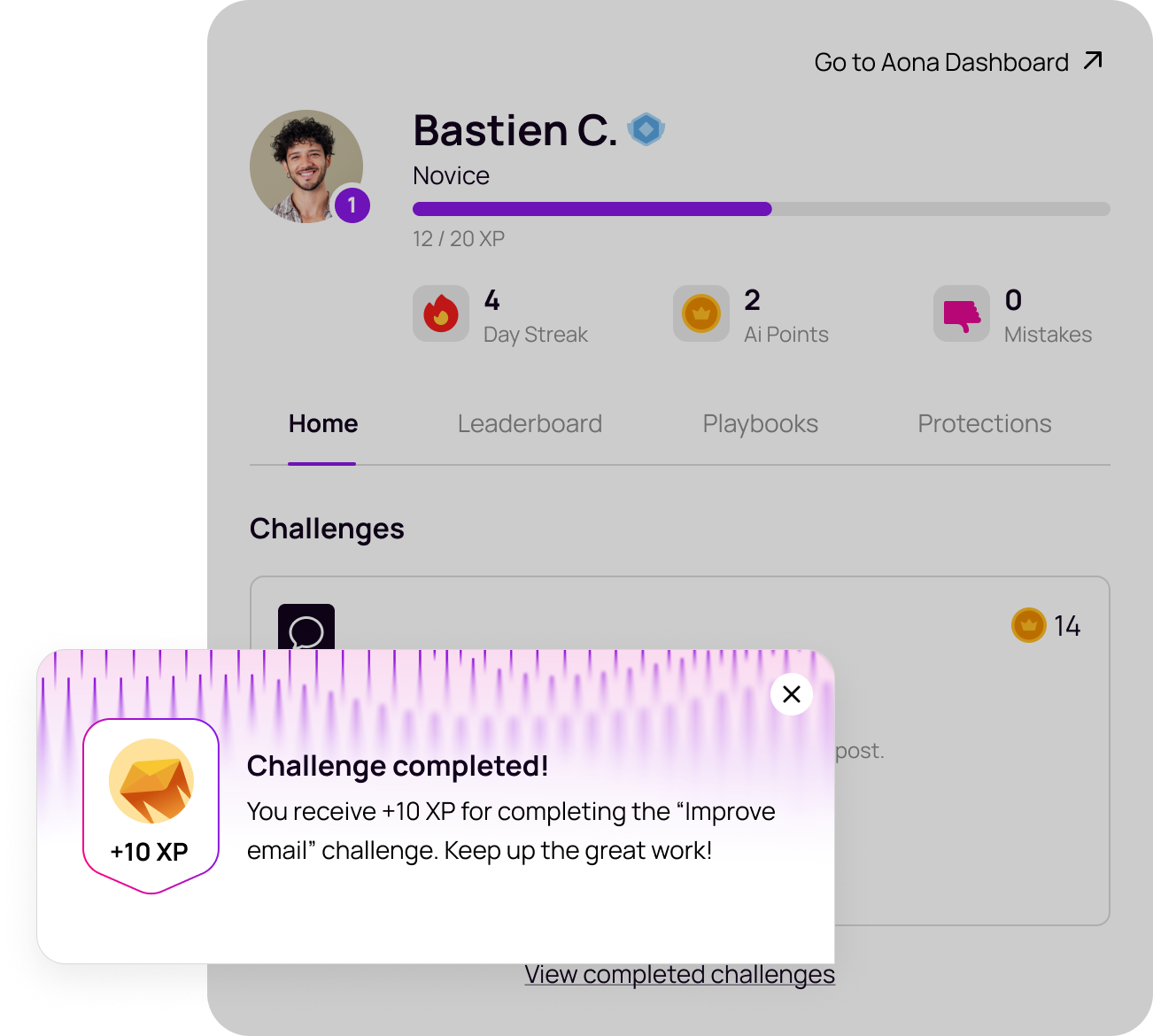
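
To make the idea of automatic redaction concrete, here is a minimal sketch of pattern-based redaction applied to a prompt before it leaves the organisation. It is an illustration only and does not describe how Aona's guardrails are implemented: the `PATTERNS` table, the `redact` function, and the two regular expressions are hypothetical, and real detection of PII, IP, and confidential data would need much broader coverage than two regexes.

```python
import re

# Hypothetical patterns for illustration only; not Aona's actual rules.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 16 digits in groups of four, optionally separated by a space or hyphen.
    "CARD": re.compile(r"\b\d{4}(?:[ -]?\d{4}){3}\b"),
}


def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace each matched span with a placeholder and report which labels fired."""
    found = []
    for label, pattern in PATTERNS.items():
        if pattern.search(prompt):
            found.append(label)
            prompt = pattern.sub(f"[{label} REDACTED]", prompt)
    return prompt, found


if __name__ == "__main__":
    text = "Email jane@example.com about card 4111 1111 1111 1111 today"
    cleaned, labels = redact(text)
    print(cleaned)
    print(labels)
```

A policy layer could then use the returned labels to decide whether to send the cleaned prompt, block it, or show the user guidance on a safer alternative.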

ENGAGE

Real-Time AI Coach

Equip employees with an AI coach that provides instant guidance and best practices as they work.

This virtual coach offers tips, suggests safe alternatives, and answers questions, helping staff use AI effectively and ethically in their day-to-day tasks.

INTEGRATION

Seamless Integration for Comprehensive Data Protection

Implement enterprise-grade AI data protection with Aona AI. Our APIs keep data flows secure whether you deploy on-premises or in the cloud. Consolidate AI analytics in one place for stronger security and observability across your AI environment.

TESTIMONIALS

What our clients say

Aona AI gave us visibility and control over AI use across our teams, helping us stay secure while embracing innovation.

Mining

Senior Product Manager

Aona stands out for its AI security expertise and vision. A trusted partner helping us govern and secure our AI future.

Law firm

Director of AI Operations

Aona AI delivers unmatched AI-driven threat detection, tackling evolving cybersecurity challenges with precision and vision.

Cybersecurity Provider

Chief Security Officer

Aona AI leads in AI governance and security—helping us protect data, ensure visibility, and build a trusted AI future.

Law firm

Chief Information Officer

Aona AI empowered us to innovate with confidence by protecting our IP, ensuring privacy, and unlocking AI’s full potential.

Investment firm

Director

A clever solution to facilitate the adoption of GenAI across enterprises securely while providing granular controls and oversight.

Managed Service Provider

Partner, Innovation

Ready to transform your workforce with responsible AI?

Boost productivity with safe, trusted AI.

Our latest blogs

Explore our latest insights and updates on secure AI integration and data protection.

Salim Sebkhi | January 17, 2026

AI Adoption in 2026 (Part 1) - What Last Year's Transformation Reveals About Your Next Move

Bastien Cabirou | December 2, 2025

Agentic AI in 2025: What It Is, Why It’s Risky, and How We Use It Without Burning the House Down

Bastien Cabirou | October 29, 2025

Top AI Security Threat Predictions for Australia in 2026

Frequently Asked Questions

Our support team is dedicated to helping you navigate the AI transformation journey with Aona AI. If the questions listed here don't fully address your inquiries, please don't hesitate to get in touch. We're here to ensure your experience with our platform is seamless, secure, and satisfactory.

Empowering businesses with safe, secure, and responsible AI adoption through comprehensive monitoring, guardrails, and training solutions.

Contact

Level 1/477 Pitt St, Haymarket NSW 2000

contact@aona.ai

Copyright © Aona AI. All Rights Reserved.